Creating Custom AI Applications for Student-Oriented Conversations

By Erin Frazier, Euan Bonner, Ryan Lege, Kanda University of International Studies

Introduction

Artificial intelligence (AI) is becoming ubiquitous and intertwined with everyday life, especially with the proliferation of Intelligent Personal Assistants (IPAs) such as Siri, Alexa, Cortana, and Google Assistant. These AI assistants are designed to interact with us naturally and answer our queries in a realistic, human manner. At the Research in Experimental Education Lab (REE Lab) at Kanda University of International Studies, we are always looking for ways to integrate new technology into language classrooms, and we saw that IPAs have immense potential as a tool for language learners because they process natural language. While considering this, we discovered accessible tools that allow users to create their own AI-powered conversations. As a result, we carried out the following activity exploring how a student-created IPA application can act as an intermediary between peers and allow for asynchronous communication.

AI has been used in the classroom in previous studies as a way for students to practice speaking skills, and learners have expressed a positive attitude towards using the technology (Obari & Lambacher, 2019). To date, most studies utilizing AI for language learning have focused on student experiences with IPAs such as Amazon’s Alexa, Apple’s Siri, or Google’s Assistant. Goksel-Canbek and Mutlu (2016) suggest that AI can be effectively used to enhance listening and speaking skills. Dizon (2017) notes that the use of automatic “speech recognition, which is the technology that allows users to speak and be understood by IPAs and other related software… may have a positive impact on the improvement of L2 skills, especially in terms of the development of pronunciation” (p. 813). Luo (2016) even found that pronunciation instruction using automatic speech recognition was as effective and sometimes more effective than human instruction.

However, one common concern with IPAs is their inability to understand accented speech. Recently, some studies have highlighted that the technology has improved to a degree that minimizes this problem. Moussalli and Cardoso (2019) conducted a study using Amazon’s Alexa IPA and found “that the adopted IPA adapts well with accented speech. In addition, it exposes learners to oral input that is abundant and of good quality, and provides them with ample opportunities for practice (both input/listening and output/speaking) through human-machine interactions” (p. 19). The studies mentioned above testify to the validity of using IPAs for language learning. IPAs are a potentially powerful tool for language learning, but unfortunately are limited by the interactions they have been programmed with. Currently, they don’t have the knowledge or focus to specifically address the needs of language learners. To reap the benefits of this technology, learners need to strategically approach the IPA with a goal in mind or teachers need to scaffold tasks for learners. In our case, we decided to take a scaffolding approach and investigate the benefits of involving learners in the creation process of an IPA application designed specifically to meet learner needs.

Google’s IPA creation tool Dialogflow is a web-based platform where users can create their own conversation-based applications for IPAs such as Google Home. Users start by creating a list of questions with important keywords highlighted, and then add a variety of responses that react to those keywords in order to identify the user’s intent and fulfill tasks. It requires no coding knowledge and is quite easy to understand and start using. All applications created with Dialogflow can be tested on the spot, as you go, by uttering a special activation phrase to your IPA.

Using Dialogflow, we introduced the idea of programming AI systems to language learners at a Japanese private university. Conducted during orientation, this activity allowed first-year university students to asynchronously ask their second-year peers questions about the school and student life through a customized IPA application.

The Class Activity

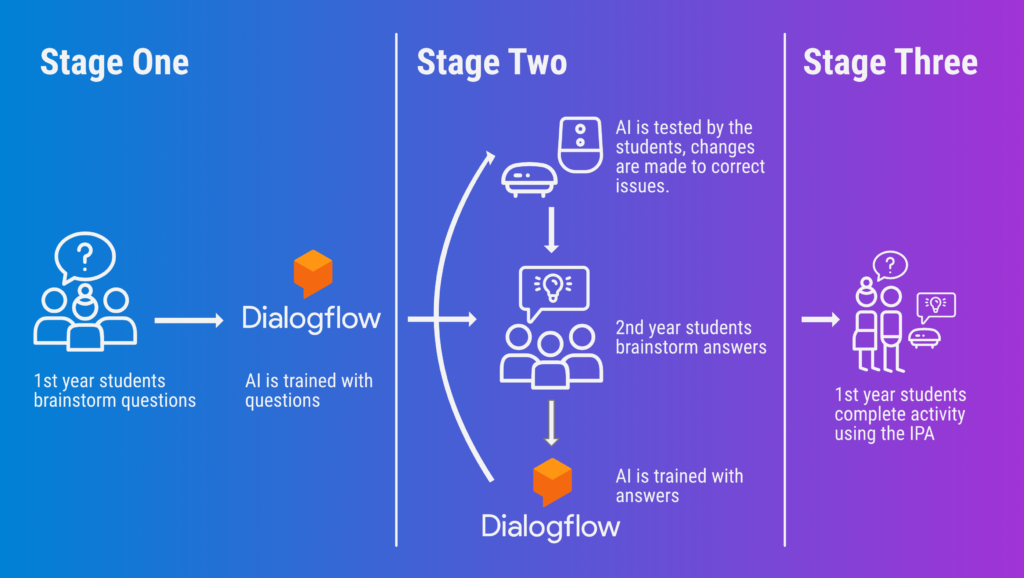

For this project, we decided that using an IPA would be a perfect way for a first-year class to become familiar with the campus and get advice from a second-year class. The activity was broken down into three stages, ending with an AI conversation-based activity. In stage one, first-year students generated questions and their variants to train the IPA. In the second stage, second-year students followed a circular process of generating input, programming through Dialogflow, testing AI comprehension, and refining input to develop the IPA application. This led to the third stage, in which the first-year students conversed with the AI in a conversation-based activity (see Picture 1).

The First Stage

We started with a first-year class during orientation. On our campus we have a specialized facility to aid learners in self-study, but using the facility can be complicated. We had the first-year class first walk around the facility and look for any areas they were interested in using or did not know how to use. After that, the learners were asked to formulate questions about the area with multiple variations of each query for the second-year students. This step was important because when you program IPA applications, the AI does not look at grammar or sentence structure when asked a question. Instead, it looks for keywords and the action you wish the system to perform. This is especially important when working with language learners, as they may not have the linguistic competence to communicate their meaning using a variety of syntactic structures (e.g. What is your favorite flavor of ice cream? What kind of ice cream do you like? Which flavor of ice cream do you prefer? Which kind of ice cream is your favorite?).

The Second Stage

Following the generation of questions with the first-year students, we carried out the next phase of the project with our second-year students. First, we introduced the concept of AI and its potential uses. We then allowed students to interact with a Google Assistant-enabled device to better understand how interaction takes place with an IPA. Following this, students brainstormed ways they could use IPAs to help other people. This led nicely into an introduction to the project. To help our first-year students easily get information regarding university facilities, our second-year students worked on creating an IPA application specifically tailored with advice and useful information. The second-year students brainstormed answers to the first-year students’ questions within a shared online document. Following a peer review of the answers, we then helped the students add their responses into Dialogflow to train our IPA application. The second-year students then tested the custom assistant on the spot. If the responses were not satisfactory, they made corrections and repeated the process. Due to the cloud-based nature of Dialogflow, it was possible for students to make changes on the fly, without having to download or update the application. Once the second-year students were satisfied with their custom IPA application, it was ready for the next step: supporting first-year students.

The Third Stage

During the next lesson, our first-year students got to try the IPA application out in our facilities on campus. We gamified the activity by creating a scavenger hunt involving both Augmented Reality (AR) tasks and our custom IPA application. The activity began in the classroom. Students in pairs were given a list of scrambled words of the locations in the facility (e.g. front desk: orndtksef OR peer advising: viiprgdaenes). Once they unscrambled one of the locations, the students came and told us their answer. If the answer was correct, they were given a task to complete at the location in which they would interact with either the AI for a conversation (see Picture 2) or AR for a video explanation (see Picture 3).
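For teachers who want to prepare a similar scavenger hunt, the scrambled location names can be generated and checked with a few lines of code. The following is only a minimal sketch: the function names `scramble`, `is_anagram`, and `is_correct` are our own illustrative choices, and the handout used in the activity may well have been prepared by hand.

```python
import random


def normalize(text):
    """Keep only the letters of a name, in lowercase (spaces are dropped)."""
    return "".join(ch for ch in text.lower() if ch.isalpha())


def scramble(name, rng):
    """Return the letters of a location name in shuffled order."""
    letters = list(normalize(name))
    scrambled = letters[:]
    # Reshuffle until the order differs from the original spelling.
    # This is only possible when the name has two or more distinct letters.
    while len(set(letters)) > 1 and scrambled == letters:
        rng.shuffle(scrambled)
    return "".join(scrambled)


def is_anagram(scrambled, name):
    """Check that a scrambled word uses exactly the letters of the name."""
    return sorted(normalize(scrambled)) == sorted(normalize(name))


def is_correct(answer, name):
    """Accept a student's answer regardless of capitalization or spacing."""
    return normalize(answer) == normalize(name)


if __name__ == "__main__":
    rng = random.Random()
    for location in ["front desk", "peer advising"]:
        print(f"{location}: {scramble(location, rng)}")
```

The two scrambled words from the handout pass the `is_anagram` check against their locations, which is a quick way to proofread a worksheet before printing it.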

Task 1: (AI) Go to the location and speak to the Google Home.

| You: | To start Google Home say: “Hey Google, talk to senior students” |
| Google: | Sure here’s the test version of senior students. What do you want to know? |
| You: | Ask one of the questions below in each of the different areas. Write down the advice. |
| You: | Say (Good bye) when you are finished with the area. |

Table 1 – Example of AI task handout that students filled in

Task 2: (AR) Watch the AR video and answer the following:

| 1. Who are 3 people you can talk to here? |
| 2. Conversation |
| a. What do you say first? _____________________________________________ |
| b. Question 1 ______________________________________________________ |
| c. Question 2 ______________________________________________________ |
| d. End of conversation _______________________________________________ |
| 3. Task: |
| a. Question 1 answer: _______________________________________________ |
| b. Question 2 answer: _______________________________________________ |

Table 2 – Example of AR task handout that students filled in

Discussion

This activity taught us a lot about how AI works and how it could be used for language education. We will focus on some key issues to be aware of when bringing an IPA into a language learning environment. In the following discussion, we will highlight specific language needed when utilizing AI-based conversations. Also, we will look at practical issues like the environments in which the AI devices are placed. Finally, we will explore other possible activities to bring AI into the classroom.

The Language of AI-interactions

When developing your own AI-based conversations, it is important to know how the system is processing natural language. Dialogflow uses two types of keywords: entities and intents. Entities are primarily noun-based keywords that the user is talking about such as “chocolate ice cream,” and intents are what the user wants to do with the entity, for example “How much is the chocolate ice cream?” As a result, AI is very forgiving of grammatical mistakes students may make, as long as the keywords such as “how much” and “ice cream” (or synonyms) are heard. In professional IPA applications dealing with complex sentence structures, creators will often train the AI with at least 10–20 variations of phrases it is likely to hear. In our case, since the students were second-language learners and focused on simple question/answer interactions, 3–4 variations on each phrase were usually sufficient.

Because of the way AI understands human speech, IPAs do not pay any attention to small errors, meaning that they are unlikely to help students realize their own mistakes unless those mistakes involve keywords. Saying, “Put alarm in 6am” is likely to be understood just as well as “Could you please set the alarm for 6am?” However, the same tolerance applies to native speakers: as long as a language learner’s intent is understood, the interaction can still proceed smoothly.
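The idea that keywords matter more than grammar can be illustrated with a toy matcher. This is a deliberately simplified sketch and not how Dialogflow works internally; the `INTENTS` table, its keyword groups, and the `match_intent` function are hypothetical names invented for this illustration.

```python
import re

# Hypothetical toy data: each intent lists groups of keywords. An utterance
# matches an intent when at least one keyword from EVERY group is present.
INTENTS = {
    "ask_price": [{"how much", "price", "cost"}, {"ice cream"}],
    "set_alarm": [{"alarm"}, {"6am", "6 am"}],
}


def normalize(utterance):
    """Lowercase the utterance, strip punctuation, and pad it with spaces."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", utterance.lower())
    return " " + " ".join(cleaned.split()) + " "


def match_intent(utterance):
    """Return the first intent whose keyword groups all appear, else None."""
    text = normalize(utterance)
    for name, groups in INTENTS.items():
        # Padding with spaces means keywords only match on word boundaries.
        if all(any(f" {kw} " in text for kw in group) for group in groups):
            return name
    return None


if __name__ == "__main__":
    for said in [
        "Put alarm in 6am",
        "Could you please set the alarm for 6am?",
        "How much is the chocolate ice cream?",
    ]:
        print(said, "->", match_intent(said))
```

In this sketch, both alarm sentences resolve to the same intent because word order and prepositions are never inspected, which mirrors why a learner's grammatical slip usually goes unnoticed.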

Strategic Instruction

There are a few things to consider before implementing this kind of activity in your own learning environment. IPAs are designed to be interacted with intuitively using conversational language. However, even native speakers need to learn how to interact with IPAs, and this need is further complicated for language learners. Hence, teachers need to provide students with strategic instruction when working with IPAs.

Fluency and Unfilled Pauses

Fluency is key. Because the AI is only listening for contextual keywords, it does not know when a speaker has finished speaking, other than through silence. Language learners will often pause mid-sentence to consider and select the next correct word. However, when this happens, the IPA will take what the user has said so far, try to interpret the entities and intents, and provide an immediate reply, which can frustrate students who are still trying to speak. Teachers should make sure students know to formulate their entire question before speaking.
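The effect of a mid-sentence pause can be shown with a small simulation. This sketch assumes a fixed silence limit of one second, which is an illustrative value only; real devices use their own end-of-speech detection, and the function name `heard_before_cutoff` is hypothetical.

```python
def heard_before_cutoff(timed_words, silence_limit=1.0):
    """Return the words captured before the first overly long pause.

    timed_words is a list of (word, pause_after_seconds) pairs.
    silence_limit is an assumed threshold, not a documented device setting.
    """
    heard = []
    for word, pause_after in timed_words:
        heard.append(word)
        if pause_after > silence_limit:
            # The silence is treated as the end of the utterance,
            # so anything the speaker says afterwards is not included.
            break
    return " ".join(heard)


if __name__ == "__main__":
    # A learner who stops to think after "I" for 2.5 seconds.
    hesitant = [("where", 0.2), ("can", 0.2), ("I", 2.5),
                ("borrow", 0.2), ("a", 0.2), ("book", 0.0)]
    # The same question spoken without a long pause.
    fluent = [(word, 0.2) for word, _ in hesitant]

    print(heard_before_cutoff(hesitant))
    print(heard_before_cutoff(fluent))
```

In the hesitant case the simulated assistant only receives the opening words, so it would try to answer an incomplete question.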

Wake-up Phrases and Visual Cues

Some students will have never interacted with an IPA before, and each type of IPA has its own wake-up phrase. For instance, our wake-up phrase for this project was “Hey Google, talk to senior students”. Students also need to know what visual cues the IPA provides to show that it is listening, such as a flashing or colored light. There is nothing more frustrating for a student than carefully considering what question to ask and how to ask it, saying it, and then discovering that the AI wasn’t even listening.

Environmental Placement

Speech recognition has improved greatly and is now much better at understanding what people are saying in noisy environments. However, there are, of course, limits to the level of background noise and the distance students can be from the device. Make sure students speak loudly, clearly, and within a reasonable distance of the IPA.

Future Directions

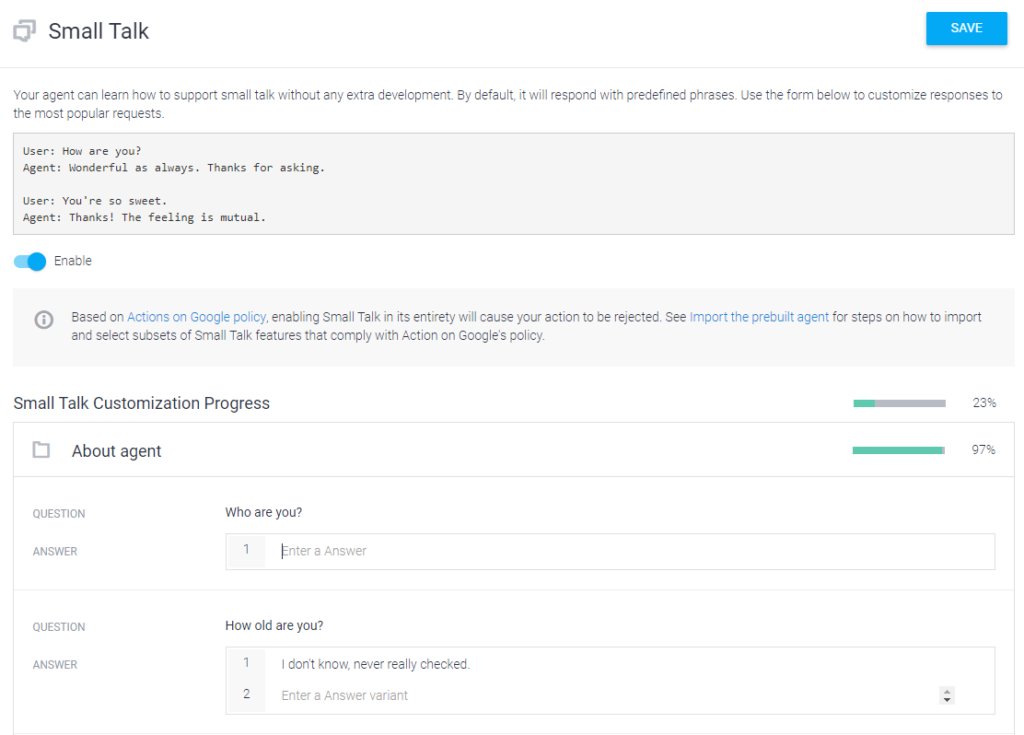

Looking forward, there are many more features of Dialogflow that we could take advantage of for future classroom activities. Dialogflow has almost 100 pre-set small talk questions and statements that it can react to with custom responses (see Picture 5). Based on this feature alone, students could each pick a topic and generate multiple answers for each one as a part of a conversation-focused classroom activity.

While providing AI-powered error correction with IPAs remains elusive, teachers could manually implement this into their IPA classroom activities. When the AI response does not match the intent of the student request, students could make a note of what they said for class analysis later. Once the error has been detected, the students could add additional training phrases to the Dialogflow intents and entities to help the AI respond more accurately next time.
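The note-taking and retraining cycle described above could be organized along the following lines. This is only a sketch of the bookkeeping, using plain Python data rather than the Dialogflow console or API; the log format, the `opening_hours` intent name, and both function names are hypothetical.

```python
def find_misses(log):
    """Return (utterance, intended_intent) pairs the AI got wrong.

    Each log entry is a dict with the keys "said", "intended", and "matched",
    as noted down by students during the activity.
    """
    return [(entry["said"], entry["intended"])
            for entry in log
            if entry["matched"] != entry["intended"]]


def add_training_phrases(phrases, misses):
    """Add each misunderstood utterance to the intent the student meant."""
    for said, intended in misses:
        existing = phrases.setdefault(intended, [])
        if said not in existing:  # avoid duplicate training phrases
            existing.append(said)
    return phrases


if __name__ == "__main__":
    # Hypothetical starting point: one intent with one training phrase.
    phrases = {"opening_hours": ["When is the front desk open?"]}
    log = [
        {"said": "What time front desk open?",
         "intended": "opening_hours", "matched": None},
        {"said": "When is the front desk open?",
         "intended": "opening_hours", "matched": "opening_hours"},
    ]
    print(add_training_phrases(phrases, find_misses(log)))
```

The updated lists would then be entered into the corresponding Dialogflow intents by hand, in the same way the second-year students added their original responses.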

Conclusion

As AI technologies progress and IPAs become ‘smarter’, tasks like the one we introduced will become more commonplace. Creating an IPA application is an extremely powerful activity to use with language learners, as programming the AI demands a deep analysis of the language. As the world changes and reacts to global events, an IPA, if programmed correctly, could be a perfect conversation partner. Recent events have shown that modern technology is adaptable and can aid in overcoming obstacles such as the 2020 pandemic. AI, in particular, can easily be applied to remote learning contexts.

Resources

Dialogflow homepage and documentation: https://cloud.google.com/dialogflow/

How to get started with Dialogflow: https://marutitech.com/build-a-chatbot-using-dialogflow/

References

Dizon, G. (2017). Using Intelligent Personal Assistants for Second Language Learning: A Case Study of Alexa. TESOL Journal, 8(4), 811–830. https://doi.org/10.1002/tesj.353

Goksel-Canbek, N., & Mutlu, M. E. (2016). On the track of Artificial Intelligence: Learning with Intelligent Personal Assistants. International Journal of Human Sciences, 13(1), 592. https://doi.org/10.14687/ijhs.v13i1.3549

Luo, B. (2016). Evaluating a computer-assisted pronunciation training (CAPT) technique for efficient classroom instruction. Computer Assisted Language Learning, 29(3), 451–476. https://doi.org/10.1080/09588221.2014.963123

Moussalli, S., & Cardoso, W. (2019). Intelligent personal assistants: can they understand and be understood by accented L2 learners? Computer Assisted Language Learning, 1–26. https://doi.org/10.1080/09588221.2019.1595664

Obari, H., & Lambacher, S. (2019). Improving the English skills of native Japanese using artificial intelligence in a blended learning program. CALL and complexity – short papers from EUROCALL 2019, 327–333. https://doi.org/10.14705/rpnet.2019.38.9782490057542