Let’s Code for Languages: Integrating AI Chatbots into Language Learning

By Ann Cai, Dino Konstantopoulos, Robert Davis, Yufei Zheng, Dongying Liu, Northeastern University

Introduction of the Research

With the improvement of deep learning technologies, the Big 4 computer companies (Google, Microsoft, Facebook, and Amazon) have been developing their robotics, machine learning, and artificial intelligence (AI) sectors for the past few years, including AI chatbots, which are computer programs that simulate and process human conversation. At the same time, they are also striving to broaden the impact of their state-of-the-art technologies by incorporating them into real life and pedagogical initiatives. Thus, it is both a challenge and an opportunity for front-line researchers and educators to use AI chatbot technologies to improve the effectiveness of teaching and learning in and out of the classroom (Bonner et al., 2019; Frazier et al., 2020; Fryer et al., 2017, 2019, 2020; Bao, 2019; Sykes, 2018).

As the world changes and reacts to global events (e.g., the COVID-19 pandemic), AI conversational chatbots could be a powerful way to simulate a conversational partner for language learners. They could also be easily applied to remote learning contexts. However, the quality of interaction with chatbots is limited by how they are programmed. AI chatbots are normally used to automate simple tasks such as answering customers’ questions, providing information on products, and placing orders. The language learning domain, by contrast, demands further specification and customization in order to meet the needs of language learners (Bonner et al., 2019; Frazier et al., 2020).

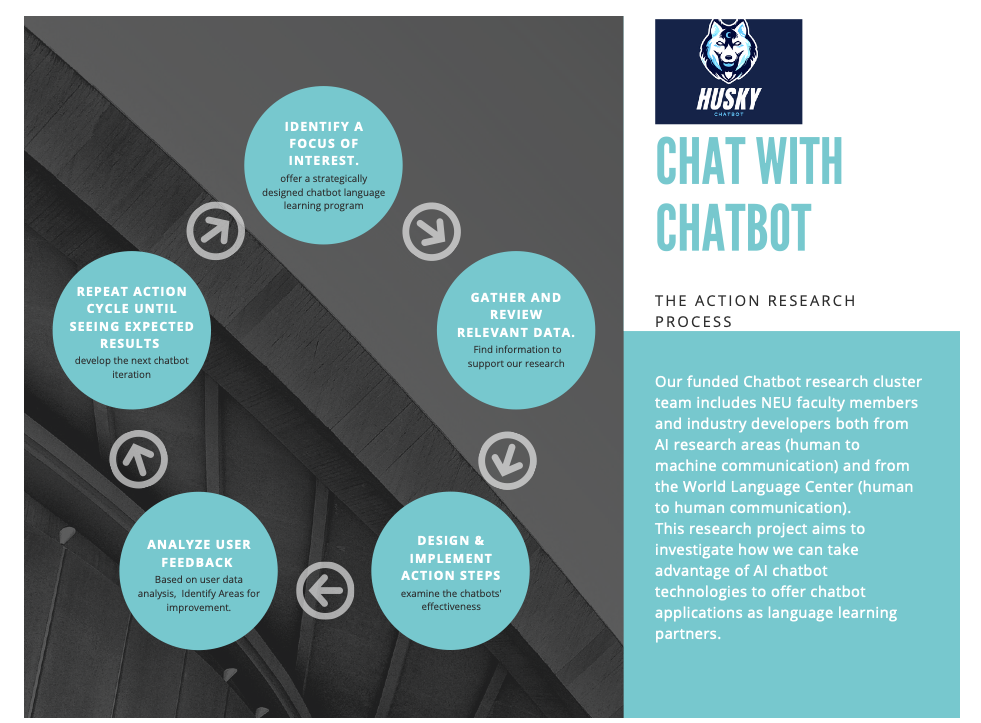

At the World Language Center at Northeastern University (NEU), we aim to have developers, researchers, and language educators work together to adopt cutting-edge technologies. Our funded Chinese Chatbot research team includes faculty members both from AI research areas (human to machine communication) and from the World Language Center (human to human communication). This research project aims to investigate how we can take advantage of AI technologies to offer chatbot applications as language learning partners.

For readers who are not familiar with chatbots, one of our customized chatbot design training videos (funded by STARTALK), in English, is presented below. It demonstrates:

1) An example of a chatbot conversational topic: “Names”

2) A voice input exercise

3) A text input exercise

This project channels NEU’s innovative spirit into creating chatbots that can speak in Mandarin and hold realistic conversations with Chinese learners. The bots, called Husky C-Bots, aim to help Chinese language learners practice their language skills by reviewing vocabulary and grammar constructs according to their learning material. Husky C-Bots can be accessed directly from web-based chatbot apps. A special Google Chrome extension (Dialogflow Helper) was also customized to play the text responses aloud. This encourages students to listen more attentively to audio responses, rather than solely relying on text messages.

Introduction of Husky C-Bots

Why Dialogflow

Before developing the Husky C-Bots, multiple well-known bot design platforms were compared. After weighing the options, Google Dialogflow was chosen because it supports the Chinese language via both text and voice input/output. Thus, all versions of Husky C-Bots have been created by utilizing the Google Dialogflow platform. The Dialogflow Essentials (ES) Edition is free to use.

Dialogflow Basics

Google Dialogflow is an AI-based, web-based tool that designers can use to create customized two-way conversational applications, also known as agents. Agents interact directly with end-users.

Husky C-Bots

As previously mentioned, Husky C-Bots were built on the Dialogflow platform. They consist of a set of three agents (HuskyChatbot1101, 1102, and 2101) as follows:

- HuskyChatbot1101 consists of 5 practical topics (in Dialogflow, topics are called intents): names, nationalities, family, making an appointment with a classmate, and overall self-introduction.

- HuskyChatbot1102 consists of 5 situational conversation topics/intents: making an appointment with a medical doctor, making an appointment with a teacher, shopping*, transportation, and asking about the weather*.

- HuskyChatbot2101 consists of 7 topics/intents: making an appointment, ordering food, asking directions, visiting a (medical) doctor, exchanging money*, renting an apartment*, and reviewing for a test.

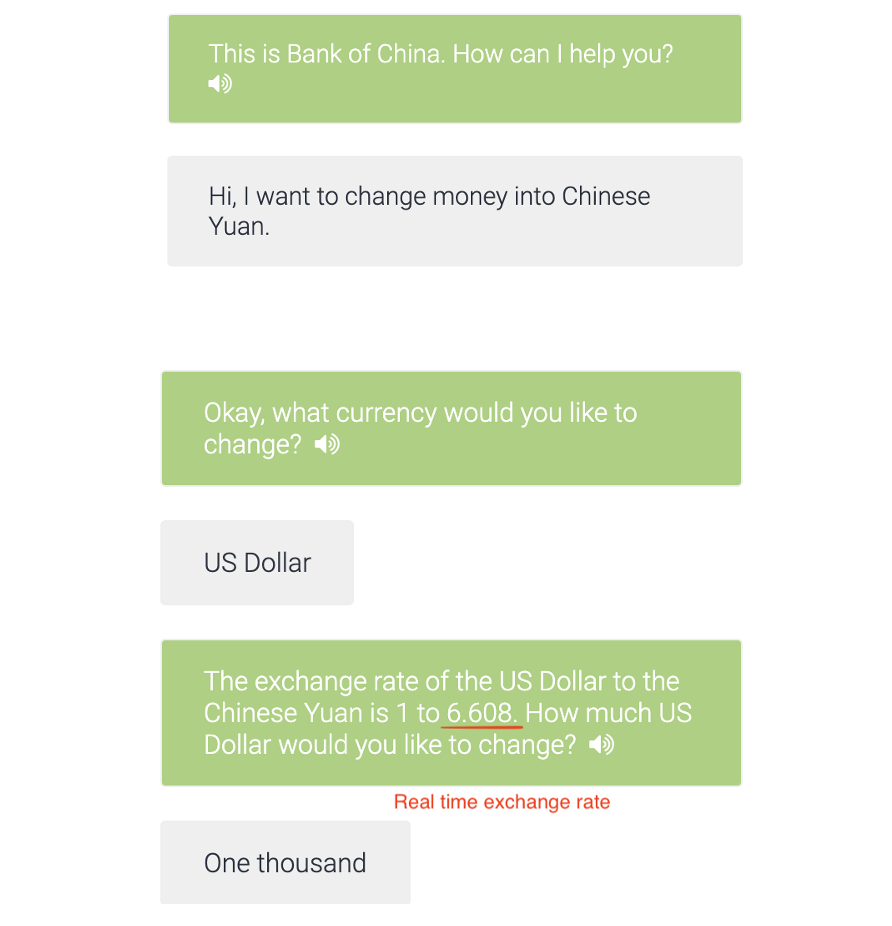

The 4 intents/topics above marked with a * retrieve real-time data, such as a weather forecast or a current money exchange rate. This approach may increase the realism for students and also offers varied practice at different times. For example, if a student needs to convert currencies in China, our AI chatbots are able to extract the data from a real-time exchange rate API (Application Programming Interface). The image below shows a dialogue example which includes the data retrieved from the currency exchange rate API.
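To make the idea of a real-time intent more concrete, here is a minimal Python sketch of a webhook handler that builds a reply from a live exchange rate. It is not the Husky C-Bots implementation. It assumes the Dialogflow ES webhook format (parameters arrive under `queryResult.parameters`, and the text reply is returned as `fulfillmentText`); the parameter names `amount`, `from_currency`, and `to_currency`, the intent name, and the `fake_rate` function are hypothetical, and the rate value is made up.

```python
from typing import Callable


def build_exchange_reply(request_json: dict,
                         fetch_rate: Callable[[str, str], float]) -> dict:
    """Build a Dialogflow ES webhook response for a currency-exchange turn."""
    query_result = request_json.get("queryResult", {})
    params = query_result.get("parameters", {})

    # Hypothetical parameter names that an agent designer might define.
    amount = float(params.get("amount", 0))
    source = params.get("from_currency", "USD")
    target = params.get("to_currency", "CNY")

    # The live lookup is injected by the caller, so any rate API can be used.
    rate = fetch_rate(source, target)
    converted = round(amount * rate, 2)

    # "fulfillmentText" is the field Dialogflow ES reads for the text reply.
    reply = f"{amount:g} {source} 可以换 {converted:g} {target}。"
    return {"fulfillmentText": reply}


def fake_rate(source: str, target: str) -> float:
    # Stand-in for a real exchange rate API call; the value is made up.
    return 6.5


request = {
    "queryResult": {
        "intent": {"displayName": "exchange_money"},  # hypothetical intent name
        "parameters": {"amount": 100, "from_currency": "USD", "to_currency": "CNY"},
    }
}
print(build_exchange_reply(request, fake_rate)["fulfillmentText"])
```

Passing the rate lookup in as a function keeps the sketch runnable offline; in a deployed webhook it would be replaced by a call to whichever exchange rate service the designer chooses.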

Research Design and Development

Adopting the progressive action research approach (see Picture 3), this study: 1) offers a strategically designed “Chat with Chatbot” learning program; 2) examines its effectiveness and identifies areas for improvement through various action cycles of user data collection; and 3) develops the next chatbot iteration. The whole project has gone through several iterations since the 2020 spring semester. The research results have been achieved through the following three phases.

The First Phase

In the 2020 spring semester, we first designed a two-way conversational chatbot for Chinese learners at the intermediate level (CHNS2101), and then integrated HuskyChatbot2101 into the Google Assistant, an AI-powered virtual assistant mobile app, for students to practice Chinese on their mobile phones.

However, we encountered the following technical issues: a) at the time, the Google Assistant supported only traditional Chinese; b) it was challenging to invoke the chatbot due to the students’ accented voice input; and c) the Google Assistant mistakenly provided extraneous real-life responses that were outside the range of our learning context. Therefore, the students’ experience was not as positive as expected for this first rendition.

Based on students’ feedback, we immediately tested a different option and switched our user interface from the Google Assistant phone app to the Dialogflow Web-Demo (web-based) integration.

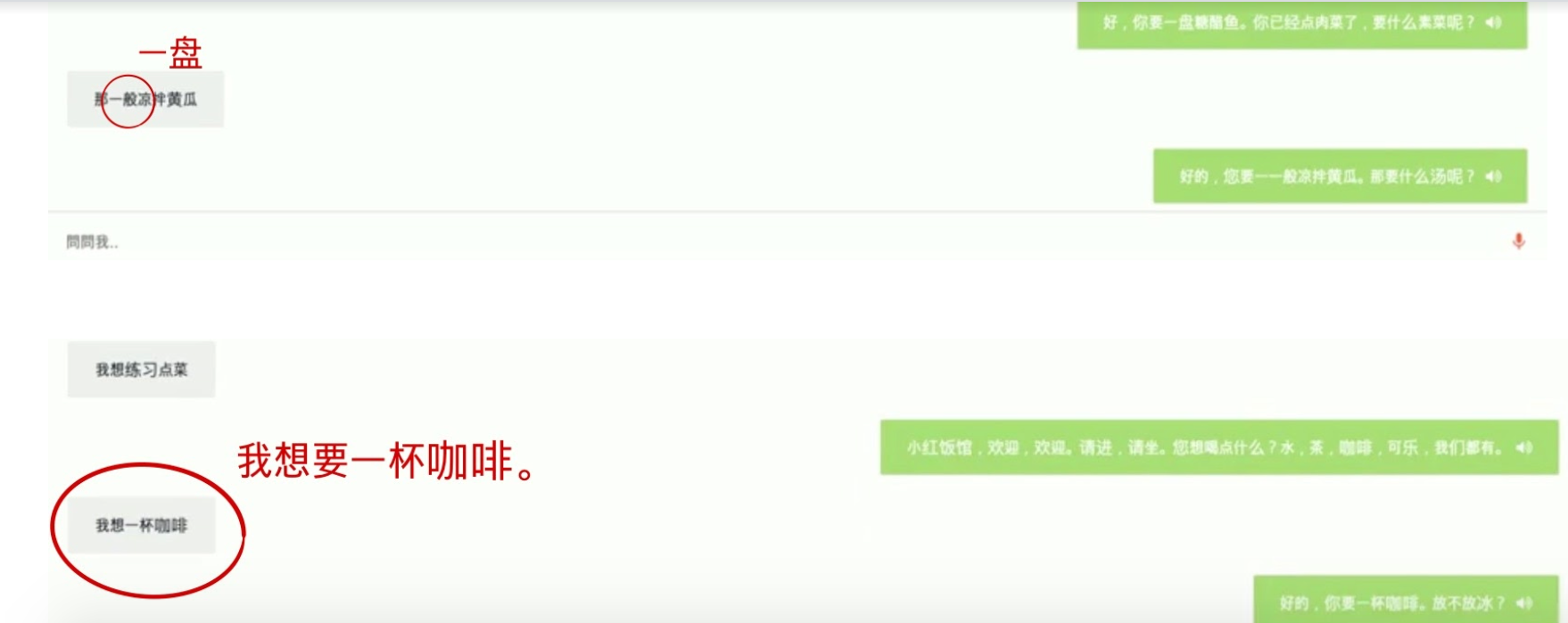

The Second Phase

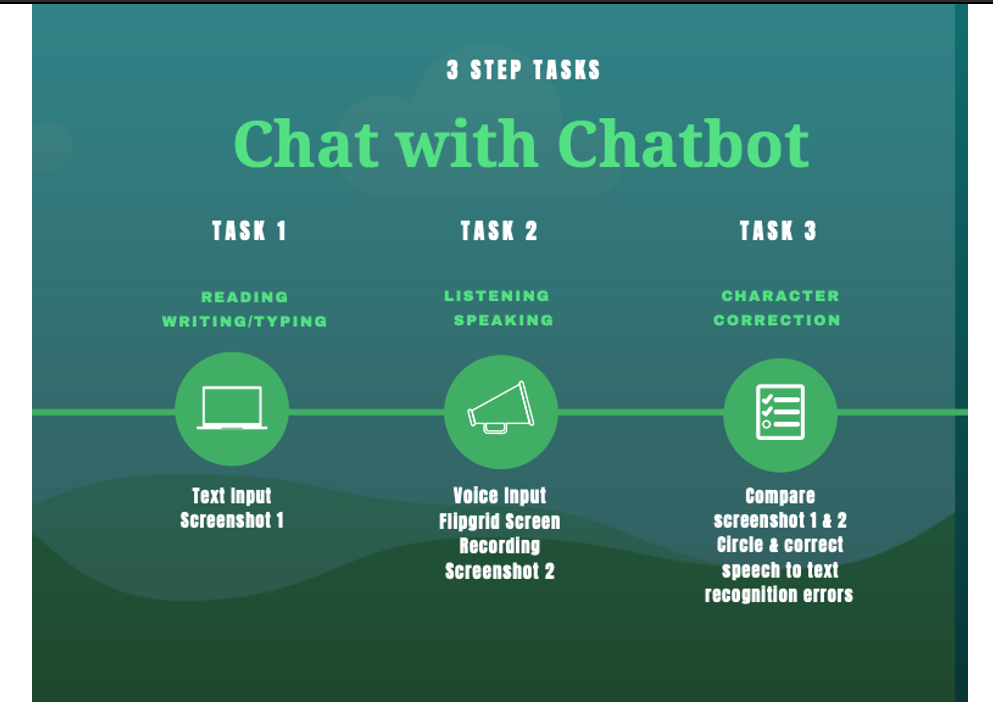

From then on, students practiced with the web-based chatbots via a set of three-step scaffolding tasks: 1) text input exercises (reading and typing) to build students’ confidence in the usability of the chatbots; 2) voice input exercises (listening and speaking); and 3) comparison and correction exercises, in which students compared the screenshots from the previous two steps and identified the speech-to-text (STT) character recognition errors generated by the Dialogflow default recognizer in step 2 (see Pictures 4 and 5). Since our research framework is based on a progressive action research approach, we collected students’ feedback over 3 semesters (2020 Spring, 2020 Fall, and 2021 Spring), including: 1) feedback on each specific topic chatbot practice (for improvement purposes); and 2) a general end-of-semester user experience questionnaire (15 items).
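The comparison in step 3 was done by students reading two screenshots, but the same idea can be sketched in a few lines of Python for readers who want to automate it. The sketch below uses the standard library's `difflib.SequenceMatcher` to list the spans where the typed text and the recognized text disagree. The example sentence is invented for illustration; only the 第十课 / 的时刻 confusion comes from this project.

```python
from difflib import SequenceMatcher


def find_stt_errors(typed: str, recognized: str) -> list[tuple[str, str]]:
    """Return (expected, recognized) pairs for every span where the texts differ."""
    matcher = SequenceMatcher(None, typed, recognized, autojunk=False)
    errors = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            # Covers substitutions, missing characters, and extra characters.
            errors.append((typed[i1:i2], recognized[j1:j2]))
    return errors


typed_text = "我们学第十课"   # what the student typed in step 1 (illustrative)
stt_text = "我们学的时刻"     # what the recognizer produced in step 2 (illustrative)
for expected, got in find_stt_errors(typed_text, stt_text):
    print(f"expected {expected!r}, recognized {got!r}")
```

Because Chinese text has no spaces, comparing character by character (rather than word by word) is the simplest choice here.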

The Third Phase

In order to make chatbot conversations more realistic and promote language immersion, starting from 2020 Fall, the Dialogflow Phone Gateway was integrated into the chatbots as oral unit tests. After integration, students could call a telephone number to speak with the chatbots over a simulated phone call, similar to automated bank call centers.

Student Feedback

At the end of each semester (three semesters total), 65 students completed a 15-item questionnaire designed to gather data related to the following research questions:

- What percentage of language learners are willing to interact with chatbots as part of their language learning?

- Do students find that their interactions with AI chatbots have helped them gain proficiency in the target language?

- What are the advantages and disadvantages of human-machine interactions versus human-human interactions in foreign language learning?

Here are the emerging themes generated from the student feedback:

Increased Acceptability

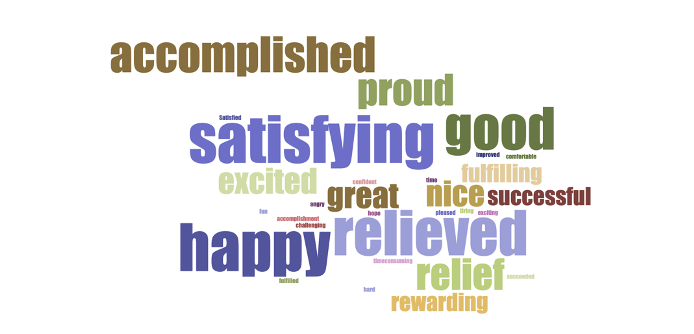

The majority of students (75%) showed a willingness to practice with the chatbots, while some students indicated their initial frustration due to the chatbots’ inability to recognize their accented Chinese speech. Based on our progressive action research framework, we have been constantly improving the quality of the user experience within and after each semester (as discussed in the takeaways section below). As a result, students eventually recorded positive feedback. The following word cloud demonstrates the sense of achievement students expressed after successfully practicing with the chatbots.

Improved Language Skills

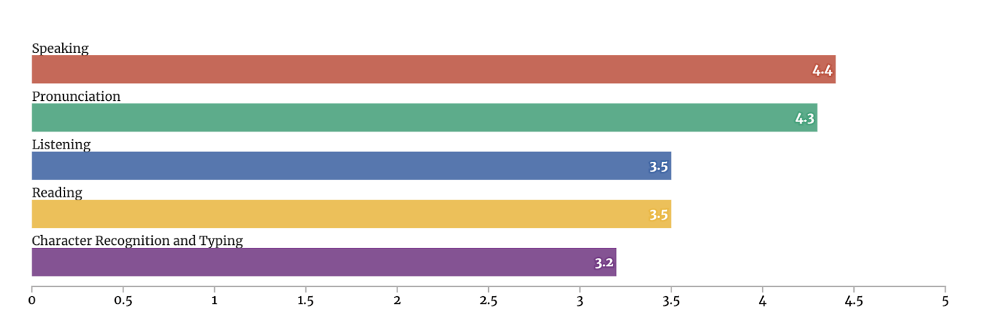

We collected quantitative data (see Picture 6), on a scale of 1 to 5, on the students’ perceptions about whether the chatbots helped them to improve these language skills: pronunciation, speaking, listening, reading, and character recognition & typing. The self-assessment results showed that:

1. Improvement of speaking and pronunciation was rated highest. Some students’ comments were:

- “Speaking was the area that I felt benefited the most from the chatbot. The best way to practice speaking is to actually do it, and the chatbot is a great way of achieving that.”

- “As this was the only real way to practice speaking outside of class and as the bot grew smarter it allowed me to practice more efficiently.”

- “This was really important for me since I think I improved a lot in my tones and clarity in my words. The chatbot is quite sensitive to the user’s tone and pronunciation.”

2. Listening skills improved through the audio responses. This is complicated by the fact that the chatbot provided the text both as audio and as written text simultaneously. Comments included:

- “I can practice speaking Chinese by myself, but I don’t get a response if I do it alone. However, the chatbot does give a response and even asks questions. I can practice speaking and listening at the same time.”

- “It helped my listening, and it was useful to have the option to have it repeat itself.”

- “I got significantly better at reading so sometimes I would just read what it said instead of listening to it.”

3. Reading, character recognition and typing were also strengthened, although they were not the main focus of the research project.

- “When I do the text-input chatbot practice, I get to practice typing and recognizing Chinese characters. I love how the chatbot has so many features.”

- “While it’s not the primary thing I focus on while using the chatbot, I like to follow along with the words as they are read, and it helps the vocabulary/characters stick.”

- “I would have to read and listen at the same time which helped me understand what I was reading. And I had to get good at character typing to keep up with the conversation.”

Embraced Human-Machine Interaction

Students appreciated that the chatbots provided unlimited practice opportunities, less pressure, and less judgment. However, students also suggested that the chatbots might perform better if they had human-like interactions, corrective feedback, more diverse topics, and fewer technical issues with accented voice recognition. Although they fully recognized the trade-offs of the chatbots, students still embraced this new learning model.

Takeaways

AI chatbots could be not only a useful conversational partner for foreign language learners but also a powerful tool for remote learning. However, there is still room to improve the quality of interacting with current chatbots. In the following section, three issues encountered in the research project will be addressed.

Unnecessary Restarts and Added Fallback Intents

Based on the research team’s observations, Husky C-Bots sometimes stopped processing after receiving a few unrecognizable attempts, forcing students to restart the conversation from the beginning. This wasted time and frustrated students. To lessen this problem, the team has modified the chatbots to ask users to answer the question again to avoid unnecessary restarts.
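One way such a re-prompt could be wired up is sketched below in Python. This is an illustration under stated assumptions, not the team's actual configuration. It assumes a Dialogflow ES webhook, where the matched intent name arrives in `queryResult.intent.displayName`, active contexts arrive in `queryResult.outputContexts`, and the reply is returned as `fulfillmentText`. The context name `pending_question` and its `last_question` parameter are hypothetical.

```python
FALLBACK_INTENT = "Default Fallback Intent"   # default name in Dialogflow ES
PENDING_CONTEXT = "pending_question"          # hypothetical context name


def handle_fallback(request_json: dict) -> dict:
    """Re-ask the pending question instead of ending the conversation."""
    query_result = request_json.get("queryResult", {})
    intent_name = query_result.get("intent", {}).get("displayName", "")
    if intent_name != FALLBACK_INTENT:
        return {}  # not a fallback turn, so this handler adds nothing

    session = request_json.get("session", "")
    context_id = f"{session}/contexts/{PENDING_CONTEXT}"

    # Look for the context that remembers which question was last asked.
    last_question = ""
    for context in query_result.get("outputContexts", []):
        if context.get("name") == context_id:
            last_question = context.get("parameters", {}).get("last_question", "")

    reply = "对不起，我没听懂。请再说一遍。"
    if last_question:
        reply = f"{reply}{last_question}"

    return {
        "fulfillmentText": reply,
        # Renew the context so the student stays on the same question.
        "outputContexts": [{
            "name": context_id,
            "lifespanCount": 2,
            "parameters": {"last_question": last_question},
        }],
    }


sample_request = {
    "session": "projects/demo/agent/sessions/abc",
    "queryResult": {
        "intent": {"displayName": FALLBACK_INTENT},
        "outputContexts": [{
            "name": "projects/demo/agent/sessions/abc/contexts/pending_question",
            "parameters": {"last_question": "你叫什么名字？"},
        }],
    },
}
print(handle_fallback(sample_request)["fulfillmentText"])
```

Renewing the context on every unrecognized attempt is what keeps the conversation from resetting; the same effect can also be configured without code by giving the fallback intent matching input and output contexts in the Dialogflow console.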

Pause Issues and Possible Solution

When our second language students practice with the chatbots, they tend to pause in the middle of sentences (Frazier et al., 2020). However, the Google Dialogflow default recognizer automatically submits unfinished messages. To solve this issue, the team suggested that users install the Google Chrome extension titled Voice in Voice Typing, which converts speech into text, to help them complete their voice messages before submitting them to the chatbots.
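The underlying idea, waiting through short pauses before treating a message as finished, can be illustrated with a small Python sketch. It does not describe how Dialogflow or the Chrome extension works internally. The segment format (start time, end time, text) and the two-second threshold are assumptions chosen for illustration.

```python
def merge_across_pauses(segments, max_pause_s=2.0):
    """Join speech segments separated by pauses no longer than max_pause_s."""
    utterances = []
    current_text, last_end = "", None
    for start, end, text in segments:
        # A long silence closes the current utterance and starts a new one.
        if last_end is not None and start - last_end > max_pause_s:
            utterances.append(current_text)
            current_text = ""
        current_text += text
        last_end = end
    if current_text:
        utterances.append(current_text)
    return utterances


# Hypothetical timed segments: (start seconds, end seconds, recognized text).
segments = [(0.0, 1.2, "我想"), (2.5, 4.0, "换一百美元"), (8.0, 9.0, "谢谢")]
print(merge_across_pauses(segments))
```

With a tolerant threshold, a learner's mid-sentence hesitation stays inside one message; lowering `max_pause_s` reproduces the premature submissions described above.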

Challenges of Accented Speech Recognition

Based on students’ feedback, Husky C-Bots’ partial inability to recognize accented speech could discourage language learners. Sometimes, Dialogflow’s default speech-to-text (STT) recognizer cannot even understand a native speaker. For example, when end-users utter a simple Chinese phrase such as “Lesson 10” (di4 shi2 ke4 / 第十课), STT recognition errors sometimes cause the chatbots to mistake it for the near-homophonous phrase “When” (de shi2 ke4 / 的时刻). Thus, the low STT recognition accuracy rate might prevent end-users from practicing with chatbots effectively.

The research team has been exploring two possible solutions to improve accented speech recognition. First, Voice Recognition Input Tools (VRITs) help recognize speech by filtering the users’ words before they are input into a Google Dialogflow-based chatbot. In this research, a recorded set of accented speech samples from non-Chinese speakers was used to test the VRITs’ recognition accuracy. Three VRITs, namely Baidu and Xunfei (standardized Chinese accent recognition tools) and the Baidu-Henan accent VRIT, were tested and compared to Google Dialogflow’s default recognizer. (The Henan province accent has less tonal range than the standardized Beijing accent, so hypothetically this VRIT might have better recognition accuracy for non-tonal foreign accents.) The results showed that these three VRITs have similar, or even better, recognition accuracy rates in comparison with the Dialogflow recognizer.
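The article does not specify how recognition accuracy was scored. A common choice for Chinese is character-level accuracy based on edit distance, and the Python sketch below shows that metric as one possibility. The recognizer names and transcripts are placeholders for illustration only; they are not the study's data or results.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance between two strings, counted in characters."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            substitution = previous[j - 1] + (ref_char != hyp_char)
            current.append(min(previous[j] + 1,      # deletion
                               current[j - 1] + 1,   # insertion
                               substitution))
        previous = current
    return previous[-1]


def character_accuracy(reference: str, hypothesis: str) -> float:
    """1 minus the character error rate, floored at 0."""
    if not reference:
        raise ValueError("reference transcript must not be empty")
    cer = edit_distance(reference, hypothesis) / len(reference)
    return max(0.0, 1.0 - cer)


# Placeholder transcripts for illustration only; not the study's data.
reference = "第十课"
outputs = {"recognizer_a": "的时刻", "recognizer_b": "第十课"}
for name, text in outputs.items():
    print(name, round(character_accuracy(reference, text), 2))
```

Running every recognizer over the same recorded samples and averaging this score per recognizer gives a like-for-like comparison.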

Second, the research team has been investigating whether the accuracy rate can be improved by building its own Machine Learning (ML) models and later integrating them into Dialogflow. The team has been training the models on a dataset of foreign-accented Chinese speech samples. For example, the team can adjust parameters to change the audio waveforms from tonal to less tonal, which better resembles the features of English-accented Chinese speech samples. Some state-of-the-art augmentation tools allow the team to grow its training data by synthesizing accented speech datasets.
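As a rough illustration of what "less tonal" could mean numerically, the Python sketch below compresses a pitch contour toward its mean. This is an assumption about one possible augmentation step, not the team's actual method: it operates on a list of pitch values per frame rather than on the raw waveform, and a real pipeline would also need pitch extraction and resynthesis. The function name and the sample contour are hypothetical.

```python
def compress_pitch_range(f0_hz: list[float], factor: float) -> list[float]:
    """Shrink a pitch contour toward its mean; factor=1 keeps it, 0 flattens it."""
    if not 0.0 <= factor <= 1.0:
        raise ValueError("factor must be between 0 and 1")
    voiced = [f for f in f0_hz if f > 0]   # 0 marks unvoiced frames
    if not voiced:
        return list(f0_hz)
    mean_f0 = sum(voiced) / len(voiced)
    # Scale each voiced frame's distance from the mean; leave unvoiced frames at 0.
    return [mean_f0 + factor * (f - mean_f0) if f > 0 else 0.0 for f in f0_hz]


# A made-up falling contour, loosely resembling a fourth-tone syllable.
falling_tone = [240.0, 220.0, 200.0, 180.0, 160.0, 0.0]
print(compress_pitch_range(falling_tone, 0.5))
```

Sweeping `factor` from 1 toward 0 would produce progressively flatter versions of the same utterance, which is one way a single recording could yield several training samples.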

Future Directions

As mentioned before, during the progressive action research, the team has been collecting the accented STT recognition error dataset generated by American students when interacting with the Chinese chatbots. The team will continue to train the customized ML models with this growing dataset to see whether the accented STT recognition accuracy rate can be improved in the near future.

Previously, to better engage students, the team attempted to incorporate the chatbots into a physical robot, Zenbo Jr., created by Asus, a Taiwanese multinational computer company. With the goal of being more flexible, compatible, and eco-friendly, the team is further planning to develop its own virtual humanoid face capable of responding to conversational flows.

Conclusion

This pioneering project gives language learners the opportunity to simulate communicating with native speakers. Based on experience, the team not only created pre-designed chatbots for students to practice Chinese, but further produced a series of step-by-step YouTube training videos to teach users to create chatbots by themselves. Then they can easily design chatbots to meet their own needs in any language by using the free Dialogflow Essentials (ES) Edition. As a result, this innovative approach can be easily adopted by teachers and students to customize their own language materials to promote immersive, self-paced learning.

This interdisciplinary approach explores the untapped possibilities in language learning and tackles some of the critical challenges remote learning is facing. This research project harnesses AI communicative chatbots as supplemental tools to provide the ample oral practice that language learning requires. This approach may be adopted as a prototype by other foreign language educators and learners.

Acknowledgements

This paper and the research behind it would not have been possible without exceptional support and valuable contributions from a number of individuals and organizations throughout the entire research and writing process. We sincerely extend our heartfelt appreciation to all of the following:

Dr. Stacey Katz Bourns, Director, World Languages Center, Northeastern University (NEU)

NEU’s Humanities Center for providing the Collaborative Research Cluster Grant

The “Chat with Chatbot” research team members: Xinru David He, Peiying Li, Wanru Shao, Xiaoyu Fan, William Cui, DQ Qiang Dong & Lillian Tsai.

Dr. Vanessa Wei, Director of the Summer Startalk Chinese Program, NEU

Asus AI Group Leaders: Peiwen Hsu & Ray Lai

References

Bao, M. (2019). Can Home Use of Speech-Enabled Artificial Intelligence Mitigate Foreign Language Anxiety–Investigation of a Concept. Arab World English Journal (AWEJ) Special Issue on CALL, (5).

Bonner, E., Frazier, E., & Lege, R. (2019, June). Hey Google: Create an AI assistant-based classroom activity to enhance language learning. JALTCALL 2019, Tokyo, Japan.

Frazier, E., Bonner, E., & Lege, R. (2020). Creating Custom AI Applications for Student-Oriented Conversations. The FLTMAG, November 2020. https://fltmag.com/creating-custom-ai-applications-for-student-oriented-conversations/

Fryer, L. K., Ainley, M., Thompson, A., Gibson, A., & Sherlock, Z. (2017). Stimulating and sustaining interest in a language course: An experimental comparison of Chatbot and Human task partners. Computers in Human Behavior, 75, 461–468. https://doi.org/10.1016/j.chb.2017.05.045

Fryer, L. K., Nakao, K., & Thompson, A. (2019). Chatbot learning partners: Connecting learning experiences, interest and competence. Computers in Human Behavior, 93, 279–289. https://doi.org/10.1016/j.chb.2018.12.023

Fryer, L. K., & Coniam, D. (2020). Bots for language learning now: Current and future directions. Language Learning & Technology, 24(2), 8–22.

Sykes, J. M. (2018). Digital games and language teaching and learning. Foreign Language Annals, 51(1), 219–224.