VoiceThread and Universal Design for Learning

By Steve Muth, President, Product Development, VoiceThread and Layla Arnold, Account Manager, Integration Specialist, Distance Trainer at VoiceThread, LLC.


Looking back 10 years to VoiceThread’s first attempts to get people to communicate online in a more human-centric way, we know our understanding of accessibility was very rudimentary. Like many accessibility newbies, we thought that accessibility-via-a-checklist was how it was done: add alt-tags to images, work with screen-readers, etc. We were so wrong.

It turns out that simple access, while obviously a starting point, is not enough. Meaningful usability for all users is a better standard, and it’s the lens we use for all of our design work. How do you define meaningful usability? Well first, you try not to. If you listen, your users will define it for you.

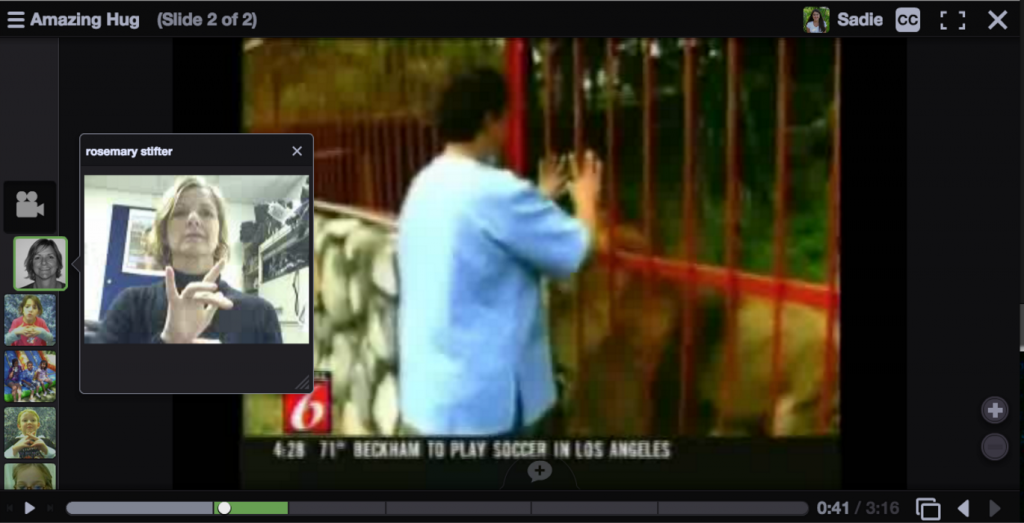

We heard from a teacher at Gallaudet University, a school for the deaf and hard of hearing, who wanted her students to be able to use sign language to make comments in an online conversation about a video. Because her young students were deaf pre-writers, our text comment feature was their only option, and it was forcing them to work through a scribe. This workflow technically provided a means of “access” but didn’t provide that rich and complex presence that comes naturally with in-person communication.

Despite originally thinking we would not offer webcam commenting, we added the feature once we were aware of what it would afford to this one user group. As soon as we saw the students signing their comments, it was clear how important it was. Their faces and movements conveyed all the layers of emotion and individuality that led us to create VoiceThread in the first place. Technically, these students were already being served by text commenting via a scribe, yet so much had been missing!

SCREEN READERS

Our first big accessibility project was to create a version of VoiceThread that blind or visually impaired students and others who depend on screen readers could use. VoiceThread conversations had always had a significant visual component, so this was a big challenge. We could have just added alt-tag information to all the visual elements and called it a day, but during testing we quickly realized what a poor experience that delivered. It was better than no alt-tags, but it was not meaningfully useful. Rather than creating a cumbersome yet accessible experience in our existing application, our approach was to build a completely separate version of the platform called VoiceThread Universal.

VT Universal is completely stripped of any visual “noise” and contains a bare minimum amount of text. This allows a screen reader to dive straight into the content of the page without having to wade through extraneous information. Screen reader users can interact with VoiceThreads using only keyboard commands. They can listen to all of the comments, even text, and record audio or text comments in response. This is simply a new way of viewing the same VoiceThread content, so a student using Universal can interact seamlessly with a student using the standard version.

We continue to expand the features of VT Universal. The next steps will be to enable secure sharing and more advanced conversation management on a VoiceThread.

CLOSED CAPTIONING

The logical next step for us was closed captioning. VoiceThreads, especially those used in language instruction, often contain audio and video content that must be made available to deaf and hard-of-hearing students. The text comment feature is not always enough. This project proved even more challenging because of the spontaneous and interactive nature of a VoiceThread. There is no single video at the core of a VoiceThread. It could be dozens or even hundreds of small videos or audio clips, each of which was recorded by a different student or instructor at different times to build a larger conversation. Each would have to be captioned individually. We’ve broken this project into multiple phases.

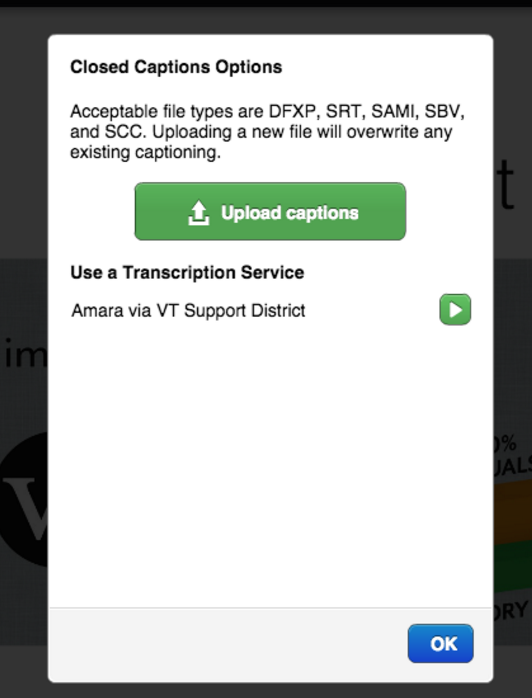

First, we enabled captioning of audio and video content on VoiceThread slides themselves. This was given priority because that “central media” content tends to be where the core instructional material is housed. By law this content would definitely need to be captioned. The comments are the more spontaneous interaction with and between students, and the requirements there can be looser. The ability to upload a user-created caption file for that central media was introduced in early 2013.

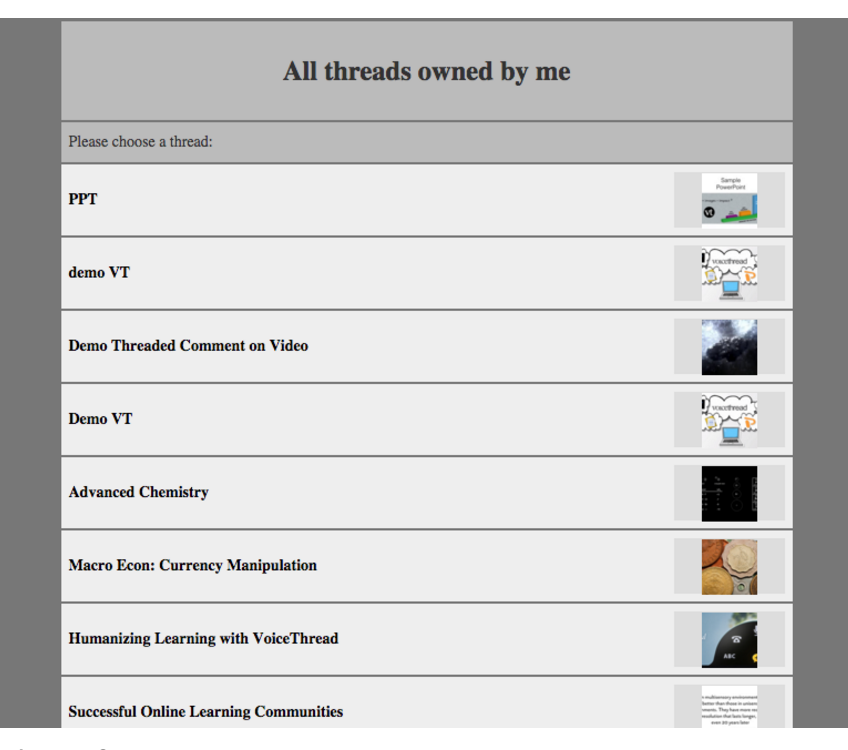

Once we met that minimum requirement, the next step was to introduce captioning of comments. The challenge here was not only to make sure each individual comment could be captioned, but also to make sure that the correct people had permission to caption them. We couldn’t allow just anyone to caption any comment; this had to be limited to the people who created the comments or the instructors leading the course. This solution, like VT Universal and captioning of central media, was designed and built with our existing team and their intimate knowledge of VoiceThread’s permissions and infrastructure. In June 2015, the ability to upload user-created caption files for comments was released.
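The permission rule described above can be illustrated with a minimal sketch. This is not VoiceThread's actual implementation; the `Comment` and `Course` types, their fields, and the `can_caption` function are hypothetical names introduced only to show the rule that a comment may be captioned by its creator or by an instructor leading the course.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Comment:
    # Hypothetical record for a single audio or video comment.
    comment_id: str
    author_id: str
    course_id: str


@dataclass
class Course:
    # Hypothetical record for a course and the instructors who lead it.
    course_id: str
    instructor_ids: set = field(default_factory=set)


def can_caption(user_id: str, comment: Comment, course: Course) -> bool:
    """Return True if the user may upload a caption file for the comment.

    Assumed rule, taken from the text: only the comment's creator or an
    instructor leading the course the comment belongs to may caption it.
    """
    if user_id == comment.author_id:
        return True
    return (
        comment.course_id == course.course_id
        and user_id in course.instructor_ids
    )


course = Course(course_id="asl-101", instructor_ids={"instructor-1"})
comment = Comment(comment_id="c-1", author_id="student-1", course_id="asl-101")

author_allowed = can_caption("student-1", comment, course)
instructor_allowed = can_caption("instructor-1", comment, course)
classmate_allowed = can_caption("student-2", comment, course)
```

In this sketch the author and the instructor are allowed, while a classmate who did not record the comment is not.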

It also became clear that while creating caption files outside of VoiceThread meets the requirements for accessibility, this is not a great user experience. We always strive to go beyond the minimum expectations to make VoiceThread a positive addition to a course rather than a burden. Instructors want caption files to be created automatically. This was not something that could be built by our team, so our solution was to engage with third parties. At the same time that we introduced captioning of comments, we started offering direct integration with the third-party captioning services 3Play Media and Amara. Institutions that have subscriptions to these services can tie those subscriptions into VoiceThread to enable professional captions of specific media assets without instructors’ having to build them manually. Instructors simply request captions for specific slides or comments as needed. The institution’s subscription covers the cost of those professional captions.

The next steps for closed captioning will involve continued engagement with third-party services, and we’ll integrate with more third-party caption generators. In addition, we are exploring ways to create automated captions that utilize voice-to-text. This would help institutions that do not have access to subscriptions with captioning services. Simple voice-to-text isn’t enough because captions with mistakes can be just as insufficient, legally speaking, as no captions at all. The challenges here are not only to find a reliable voice-to-text service, which is in itself a rapidly developing technology, but also to integrate with a platform that syncs transcriptions with audio/video and allows users to correct mistakes made by the automated generator, much like what YouTube offers. We’re looking forward to offering this solution for those who cannot create caption files themselves or subscribe to a service that does.

MOBILE ACCESS

In addition to addressing those traditional needs, we continue to expand the accessibility of VoiceThread in as many locations as possible. This effort began with an iOS mobile app in October 2011 and was followed by an Android app in November 2014. Students don’t always have access to computers, but most have access to smartphones. This is another step toward universal accessibility.

ACCESSIBILITY IS MORE COMPLEX THAN YOU THINK

It’s easy to think only about blind or deaf and hard-of-hearing students when considering online accessibility, but the accessibility story is much more complex than that. Cognitive differences, financial concerns, and network access issues can present truly difficult hurdles for many users, and they are all important. Whether an accommodation need arises from a diagnosed condition or a simple difference of circumstance doesn’t really matter to the person who needs it.

Online instruction, whether solely online or augmenting a traditional class, is no longer new or cutting edge. It will be a permanent fixture of the learning journey of every kindergartner today. To effectively serve so many different types of people, a communication and collaboration tool needs to consider important details that most people will never notice.

Despite being rich in audio and video content, VoiceThreads require relatively little bandwidth to function. Even a dial-up connection or 3G data plan will allow students to VoiceThread successfully, wherever and whenever it is convenient for them. In addition, the ability to record an audio comment using a standard telephone helps those who don’t have access to microphones or to upload speeds that could handle audio recording. Providing users with five different ways to comment, not one or two, affords people the ability to participate in whatever way serves them best. Choice should be a critical component of any platform focused on accessibility.

Our design process begins with feedback from the amazingly diverse VoiceThread community about what new features will help them work and connect with others in a more human-centric way. We now understand that accessibility isn’t something we can address once and move on; it’s a permanent part of our work process, just as it should be.